Image Credit: https://www.itsupportsingapore.sg/ / Edited with Photoshop

Artificial intelligence (AI) is more than just a favorite storyline for sci-fi movies. Although these films portray AI as robots with human-like abilities and characteristics, the term actually encompasses anything from IBM's Watson and Google's search algorithm to autonomous weapons. Some of the most popular technologies using AI are the iPhone's Siri and Tesla's self-driving cars.

Image Credit: https://www.theverge.com/

AI today is categorized as narrow (weak) AI or general (strong) AI. Narrow AI pertains to machines that are designed to perform very specific tasks, such as facial recognition, self-driving, and internet searches. They are very effective at their given tasks, but may not function properly when conditions differ from the ones they were designed for. General AI (AGI), on the other hand, would be able to perform any intellectual task that humans can - maybe even more effectively. While narrow AIs can already outperform humans at their given tasks, the long-term goal of many researchers is to create an AGI that could outperform humans at almost every cognitive task. But this is also where the fear comes from.

It raises questions like 'will a superintelligent AI come to life and enslave the human race?' and 'will terminators come to life and wipe human existence from the face of the earth?'

While these make great storylines for movies, they are far from today's reality. Whether such scenarios could ever happen is something we can't be sure of, as even researchers are conflicted on this.

Fear of the Superhuman AI

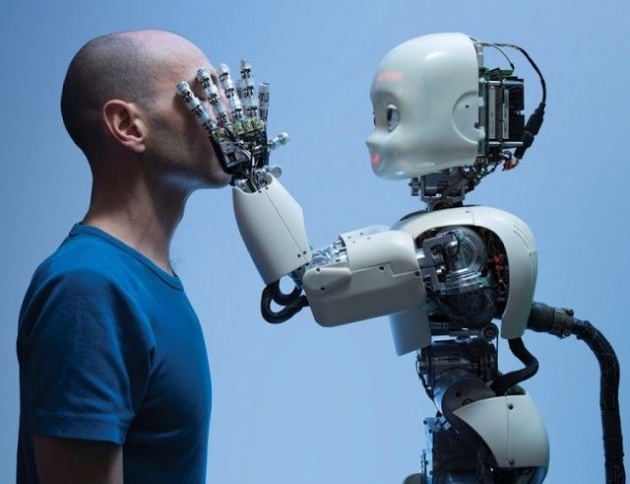

Scary robots bearing equally scary-looking weapons are something you'd expect to see in movies like 'Terminator'. But is that possible in real life - robots taking up arms and killing the humans that created them? Hardly. No such thing exists, and it probably won't anytime soon. Nobody really knows if or when it could become a reality.

Image Credit: https://www.moneycontrol.com/

Robots turning evil is a baseless hypothesis. Even if a machine run by AI does end up hurting humans in one way or another, it's not because it did so intentionally. Robots have no ability to act subjectively on anything. They also can't feel hate - or any emotion, for that matter. To be evil, you need to have consciousness, which robots don't have. They can only respond objectively to outside stimuli with the actions programmed into their system.

Image Credit: https://swisscognitive.ch/

However, machines can have goals that they must achieve based on their program. If humans get in the way and get hurt, it's not because machines hate humans. The humans are simply in the way. It's pretty much like how we treat anthills when constructing buildings. It's not that we hate ants; they just happen to be where we want to build.

Of course, this is what the beneficial AI movement wants to avoid - placing humanity in the same position as those ants.

How Can AI be Dangerous?

Image Credit: https://medium.com/@montouche/

Many researchers agree that a superintelligent AI - if one ever exists - is unlikely to exhibit human-like emotions such as love or hate. So, there's no reason to expect AIs to be intentionally malevolent or benevolent. Machines can be coded to recognize emotions and respond to them accordingly, but as far as we know, they can't be programmed to actually feel emotions. However, that doesn't mean AI machines cannot be dangerous. Here are two scenarios where experts think AI might become a risk.

- The AI is intentionally programmed to do something devastating. Autonomous weapons are AI systems that are programmed to kill. In the hands of the wrong people, these weapons have the potential to cause mass destruction and kill thousands of people. An enemy could also design these weapons to be extremely difficult to turn off, which makes them even harder to control. This risk exists even with narrow AI, and it grows as AI systems become more intelligent and more autonomous.

- The AI is designed to do something beneficial but develops a destructive method to achieve its goal. You've probably seen this in movies and, yes, this is something that can potentially happen in real life. To avoid this, we must carefully align the AI's goals with ours - a strikingly difficult task, if truth be told. A good example is asking a self-driving car to take you somewhere as fast as possible. It might break all the traffic rules just to do as you commanded. So, in a way, it did what you asked. Just not the way you would've wanted it to.

These scenarios help illustrate that advanced AI is not actually capable of malevolence on its own. Instead, they tell us that AI can cause harm if it is intentionally programmed that way or if its goals aren't properly aligned with ours.
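To make the self-driving car example above a bit more concrete, here is a minimal Python sketch of a misaligned goal. Everything in it is hypothetical and made up for illustration: the `Plan` class, the three driving plans, the numbers, and the `penalty_per_violation` value. It is not how any real self-driving system works. It only shows that a machine told to minimize travel time, and nothing else, will happily pick the plan that breaks the most rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    minutes: float    # estimated travel time
    violations: int   # traffic rules broken along the way


# Hypothetical candidate plans; the numbers are invented for illustration.
PLANS = [
    Plan("obey all traffic rules", minutes=30.0, violations=0),
    Plan("speed on the highway", minutes=22.0, violations=3),
    Plan("run red lights and speed", minutes=15.0, violations=9),
]


def literal_cost(plan: Plan) -> float:
    """'As fast as possible', taken literally: only travel time counts."""
    return plan.minutes


def aligned_cost(plan: Plan, penalty_per_violation: float = 10.0) -> float:
    """Travel time plus an assumed penalty for every rule broken."""
    return plan.minutes + penalty_per_violation * plan.violations


def choose(plans, cost):
    """Pick the plan with the lowest cost under the given objective."""
    return min(plans, key=cost)


if __name__ == "__main__":
    print("Literal goal picks:", choose(PLANS, literal_cost).name)
    print("Aligned goal picks:", choose(PLANS, aligned_cost).name)
```

With these made-up numbers, the literal goal picks "run red lights and speed", while the goal that also counts rule violations picks "obey all traffic rules". The machine is not being evil in either case. It is simply optimizing exactly what it was told to optimize, which is why spelling out the goal properly matters so much.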

Importance of AI Safety

Image Credit: https://www.kaspersky.com/

AI is on a continuous rise as more and more organizations embrace it. As a result, its impact on society is a rather large one, touching everything from law and economics to technical topics like validity, verification, control, and security. Aside from that, technology is not invulnerable to outside attacks. It's annoying if your laptop breaks down, but that's just a little inconvenience compared to an AI-controlled machine, such as a car, an airplane, or your pacemaker, breaking down. A rogue developer could even embed a trojan in a system to carry out malicious acts, such as covert data mining, which has a huge impact on the security of consumers and even of nations. Take, for example, the recent fiasco with the tech giant Huawei, which the US government has accused of secretly collecting information through its devices. Huawei may or may not be using AI to do this, but the fact remains that technology is at the heart of the accusation.

This is why we should be concerned about the short- and long-term effects of AI on security, as failures and attacks involving AI have the potential to cause far more devastating results. An important question that must be addressed is: what happens if the quest for strong AI succeeds and it becomes better than human beings at all cognitive tasks? These are just some of the reasons why AI safety has become a popular topic lately.

Here's Maggie, our resident Querlo chatbot assistant, to help us understand why AI safety research is now more important than ever.

Image Credit: Chineyes via bitLanders

Here's a video from Computerphile explaining the importance of AI safety.

Video Credit: Computerphile via YouTube

On a Final Note

AIs are not inherently evil, but they could be dangerous in the hands of the wrong people - and this is what we are trying to prevent. Additionally, machines, being machines, have the potential to go haywire. They are still man-made, after all - and humans are not perfect. I've never seen software that worked exactly as it was intended to. Trust me. I'm an IT professional. That's why it's never too early to start with AI safety research.

~~oO0Oo~~oO0Oo~~oO0Oo~~

Thanks for reading! Have a wonderful day ahead of you and keep smiling. :)

Written by Chineyes for bitLanders
