Computer science is the scientific and practical approach to computation and its applications. It is the systematic study of the feasibility, structure, expression, and mechanization of the methodical procedures (or algorithms) that underlie the acquisition, representation, processing, storage, communication of, and access to information, whether such information is encoded as bits in a computer memory or transcribed in genes and protein structures in a biological cell. An alternate, more succinct definition of computer science is the study of automating algorithmic processes that scale. A computer scientist specializes in the theory of computation and the design of computational systems.

Charles Babbage is credited with inventing the first mechanical computer.

The subfields of computer science can be divided into a variety of theoretical and practical disciplines. Some fields, such as computational complexity theory (which explores the fundamental properties of computational and intractable problems), are highly abstract, while fields such as computer graphics emphasize real-world visual applications. Still other fields focus on the challenges in implementing computation. For example, programming language theory considers various approaches to the description of computation, while the study of computer programming itself investigates various aspects of the use of programming languages and complex systems. Human–computer interaction considers the challenges in making computers and computations useful, usable, and universally accessible to humans.

Ada Lovelace is credited with writing the first algorithm intended for processing on a computer.

The earliest foundations of what would become computer science predate the invention of the modern digital computer. Machines for calculating fixed numerical tasks, such as the abacus, have existed since antiquity, aiding in computations such as multiplication and division. Further, algorithms for performing computations have existed since antiquity, even before sophisticated computing equipment was created. The ancient Sanskrit treatise Shulba Sutras, or "Rules of the Chord", is a book of algorithms written in 800 BCE for constructing geometric objects like altars using a peg and chord, an early precursor of the modern field of computational geometry.

Blaise Pascal designed and constructed the first working mechanical calculator, Pascal's calculator, in 1642. In 1673, Gottfried Leibniz demonstrated a digital mechanical calculator, called the 'Stepped Reckoner'. He may be considered the first computer scientist and information theorist, for, among other reasons, documenting the binary number system. In 1820, Thomas de Colmar launched the mechanical calculator industry[note 1] when he released his simplified arithmometer, which was the first calculating machine strong enough and reliable enough to be used daily in an office environment. Charles Babbage started the design of the first automatic mechanical calculator, his difference engine, in 1822, which eventually gave him the idea of the first programmable mechanical calculator, his Analytical Engine. He started developing this machine in 1834 and "in less than two years he had sketched out many of the salient features of the modern computer. A crucial step was the adoption of a punched card system derived from the Jacquard loom", making it infinitely programmable. In 1843, during the translation of a French article on the Analytical Engine, Ada Lovelace wrote, in one of the many notes she included, an algorithm to compute the Bernoulli numbers, which is considered to be the first computer program. Around 1885, Herman Hollerith invented the tabulator, which used punched cards to process statistical information; eventually his company became part of IBM. In 1937, one hundred years after Babbage's impossible dream, Howard Aiken convinced IBM, which was making all kinds of punched card equipment and was also in the calculator business, to develop his giant programmable calculator, the ASCC/Harvard Mark I, based on Babbage's Analytical Engine, which itself used cards and a central computing unit. When the machine was finished, some hailed it as "Babbage's dream come true".
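As a rough modern illustration of the kind of computation Lovelace's note describes, the Bernoulli numbers can be generated from a standard recurrence. The sketch below is not a reconstruction of her original procedure for the Analytical Engine; it assumes the convention B_1 = -1/2, and the function name `bernoulli_numbers` is chosen here for illustration only.

```python
from fractions import Fraction
from math import comb  # available in Python 3.8+


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions (convention B_1 = -1/2).

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    solved for B_m at each step.
    """
    b = [Fraction(1)]  # B_0 = 1
    for m in range(1, n + 1):
        # Sum over the already-known terms B_0 .. B_{m-1}.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        # The coefficient of B_m in the recurrence is C(m+1, m) = m + 1.
        b.append(-acc / (m + 1))
    return b


if __name__ == "__main__":
    for i, value in enumerate(bernoulli_numbers(8)):
        print(f"B_{i} = {value}")
```

Exact rational arithmetic is used because the Bernoulli numbers are fractions, and floating-point rounding would accumulate quickly through the recurrence.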

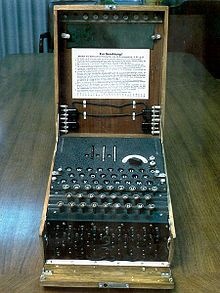

The German military used the Enigma machine (shown here) during World War II for communication they thought to be secret. The large-scale decryption of Enigma traffic at Bletchley Park was an important factor that contributed to Allied victory in WWII.

The contributions of computer science to science and society include:

- The start of the "digital revolution", which includes the current Information Age and the Internet.[19]

- A formal definition of computation and computability, and proof that there are computationally unsolvable and intractable problems.[20]

- The concept of a programming language, a tool for the precise expression of methodological information at various levels of abstraction.[21]

- In cryptography, breaking the Enigma code was an important factor contributing to the Allied victory in World War II.[18]

- Scientific computing enabled practical evaluation of processes and situations of great complexity, as well as experimentation entirely by software. It also enabled advanced study of the mind, and mapping of the human genome became possible with the Human Genome Project.[19] Distributed computing projects such as Folding@home explore protein folding.

- Algorithmic trading has increased the efficiency and liquidity of financial markets by using artificial intelligence, machine learning, and other statistical and numerical techniques on a large scale.[22] High-frequency algorithmic trading can also exacerbate volatility.[23]

- Computer graphics and computer-generated imagery have become ubiquitous in modern entertainment, particularly in television, cinema, advertising, animation and video games. Even films that feature no explicit CGI are usually "filmed" now on digital cameras, or edited or postprocessed using a digital video editor.[citation needed]

- Simulation of various processes, including computational fluid dynamics, physical, electrical, and electronic systems and circuits, as well as societies and social situations (notably war games) along with their habitats, among many others. Modern computers enable optimization of such designs as complete aircraft. Notable in electrical and electronic circuit design are SPICE, as well as software for physical realization of new (or modified) designs. The latter includes essential design software for integrated circuits.[citation needed]

- Artificial intelligence is becoming increasingly important as it gets more efficient and complex. There are many applications of AI, some of which can be seen at home, such as robotic vacuum cleaners. It is also present in video games and on the modern battlefield in drones, anti-missile systems, and squad support robots.